
By Rainer Kocsis, Senior Analyst

In recent Pivotal Research white papers, we’ve highlighted some common market research mistakes and offered remedies to avoid them. On paper, the benefits of conducting error-free research are clear. But how do the consequences of error-ridden market research shake out in the real world?

Over the past century, organizations ranging from governments to private corporations and other institutions have made their fair share of costly market research blunders. Sometimes these stem from firms not treating research as a priority: taking shortcuts to save time and money, or assuming they already know all the answers. Usually, however, disastrous market research flops begin as innocent mistakes by well-intentioned researchers.

In this second installment of a series of articles looking at four notorious historical examples of data science gone wrong, we discuss how you can avoid making those same mistakes. After all, to paraphrase Warren Buffett, “it’s good to learn from your own mistakes, but even better to learn from other people’s mistakes.”


Gimmick or predictor?

The earliest public opinion polls were products of newspapers. The Harrisburg Pennsylvanian conducted the first informal straw poll during the 1824 US presidential election between Andrew Jackson and John Quincy Adams. Straw polls later became a popular feature for 20th-century magazine publishers, who used them as a gimmick to attract readers: the mail-in ballots came bundled with subscription offers. More than eighty straw polls were conducted in the 1924 presidential election, six of them national. Newspapers also began conducting straw polls on pressing issues of the day, such as prohibition, and then treated the results as news.

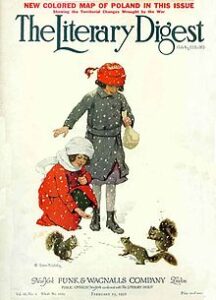

2.3 million people can’t be wrong

In advance of the 1936 presidential election, the Literary Digest—a venerable, popular, and influential weekly general-interest news magazine with a large circulation that catered to an educated, well-off clientele—conducted an ambitious national survey of voter preference by mailing out 10 million postcard ballots to a list of its subscribers asking them to indicate their preference in the election.

Days before the election, the Digest predicted that the Republican candidate, Alf Landon, a mild-mannered, middle-of-the-road Kansan, would defeat the incumbent Democratic president, Franklin Delano Roosevelt. The returns indicated a 3-to-2 landslide for the Republican: Landon would carry more than 30 states, 370 electoral votes, and 57% of the popular vote, compared with 43% and 161 electoral votes for Roosevelt. It should have been an easy win.

The massive straw poll tallied the voting preferences of more than 2,270,000 Americans. The Digest issued its predictions in an article boasting that the figures represented the opinions of “more than one in every five voters in our country” and that “the country will know within a fraction of one percent the actual popular vote of 40 million.” In the city of Chicago, every third registered voter received a ballot from the Digest. Four years earlier, after the Digest had correctly forecast Roosevelt’s 1932 victory, even the Chairman of the Democratic National Committee, James Farley, had declared that “any sane person cannot escape the implication of such a gigantic sampling of popular opinion… it is a poll fairly and correctly conducted.”

A “superlandslide” victory

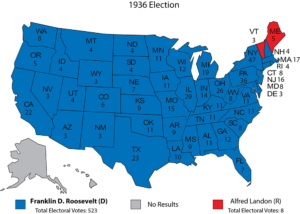

Perhaps you already know the actual election result. It was one of the most decisive political victories in US presidential history—something the newspapers called a “superlandslide”—for Roosevelt.

Indeed, Landon suffered a crushing defeat. Roosevelt nearly swept the country, carrying 46 of 48 states, while Landon won only two states: Maine and Vermont. The Democrat also commanded the popular vote by one of the largest margins in any presidential election: 62% for Roosevelt to Landon’s 38%. Roosevelt won a comfortable 523 electoral votes and Landon’s electoral vote count of eight remains tied for the record low for a major-party (Republican or Democratic) nominee since the 1850s.

The magnitude of the error destroyed the magazine’s credibility, and it went out of business within 18 months of the election. The backlash over the Digest’s failed prediction also shook public faith in polls and ushered in a period when newspapers shied away from highlighting poll results in their coverage.


The Digest faced competition from George Gallup, a relatively unknown advertising executive who conducted his own poll based on a telephone sample of just 50,000 people drawn from telephone directories. Gallup correctly predicted a Roosevelt win, earning national recognition and enormous publicity. The feat established Gallup as a “founding father” of public opinion research and cemented the Gallup Poll as a staple of American polling.

The disastrous prediction of an Alf Landon victory is widely regarded as a landmark event in the history of survey research in general and polling in particular. It marked the demise of the straw poll, of which the Digest was the most conspicuous and well-regarded example, and the rise of the “scientific” poll. It is still used to illustrate the effects of poor sampling, what causes bad surveys, and how to recognize good ones. Subsequent work in the social sciences has shown that a scientific survey does not need to poll millions of people; a few thousand or even a few hundred respondents are adequate in most cases, so long as they are appropriately chosen.

Digest-ing the Error

Did something happen that made people suddenly change their minds between filling out the Digest ballot and voting? No. The error lay in the Digest’s methods. The margin of error measures an estimate’s reliability only when the sample is drawn at random from the population it is meant to represent; it says nothing about a sample that is skewed to begin with.
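A quick calculation shows why sample size alone was not the problem. The Python sketch below uses the standard normal-approximation formula for the 95% margin of error of a proportion, assuming a simple random sample and the worst case p = 0.5; the function name `margin_of_error` is our own, for illustration only.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion.

    Normal approximation z * sqrt(p * (1 - p) / n); p = 0.5 gives the widest
    margin. The formula assumes a simple random sample from the target
    population and does not account for bias in how the sample was selected.
    """
    if n <= 0:
        raise ValueError("sample size must be positive")
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a proportion between 0 and 1")
    return z * math.sqrt(p * (1 - p) / n)


if __name__ == "__main__":
    # Sample sizes from a few hundred up to the Digest's roughly 2.3 million returns.
    for n in (400, 1_000, 2_500, 2_300_000):
        print(f"n = {n:>9,}: +/- {margin_of_error(n):.2%}")
```

By this formula, about 1,000 randomly chosen respondents give a margin of roughly ±3 percentage points, and the Digest’s 2.3 million ballots would nominally give less than ±0.1 points. Yet the Digest’s 57% forecast for Landon missed his actual 38% by about 19 points, far outside that nominal margin, which is what happens when the random-sampling assumption behind the formula does not hold.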

The Digest overlooked one fact: its sample was biased from the outset. At the time, people with magazine subscriptions were not a statistically accurate cross-section of American voters. Magazines were a luxury, so the people sampled were relatively affluent, and affluent voters were more likely to vote Republican than the typical voter of the time.

In research, bias is a systematic error that skews conclusions in a consistent direction. It can creep in when a researcher misinterprets or mishandles results, but it can also be built into the way participants are chosen, and unlike random error it does not shrink as the sample grows. “President Alf” is an example of selection bias: the Digest used its own subscription list as the sampling frame. Because the 10 million people on that list did not reflect how the country would vote, the huge sample of 2.3 million was meaningless; the selection procedure was skewed from the beginning. If the research you’re doing has no predictive power, you may as well not do research at all.
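To see why a larger sample cannot rescue a biased frame, here is a small Python simulation. All parameters are hypothetical assumptions chosen for illustration, not 1936 data: 25% of a synthetic electorate sits on a “subscriber list”, Landon support is 60% on the list and 30% off it, so true Landon support is about 37.5%. The names `make_population` and `landon_share` are illustrative.

```python
import random

# Hypothetical parameters for illustration only; these are NOT 1936 figures.
LIST_SHARE = 0.25          # fraction of voters who appear on the "subscriber list"
LANDON_ON_LIST = 0.60      # assumed Landon support among listed (affluent) voters
LANDON_OFF_LIST = 0.30     # assumed Landon support among everyone else

Voter = tuple[bool, bool]  # (on_list, votes_landon)


def make_population(size: int, rng: random.Random) -> list[Voter]:
    """Build a synthetic electorate with the assumed list membership and support."""
    population = []
    for _ in range(size):
        on_list = rng.random() < LIST_SHARE
        p_landon = LANDON_ON_LIST if on_list else LANDON_OFF_LIST
        population.append((on_list, rng.random() < p_landon))
    return population


def landon_share(voters: list[Voter]) -> float:
    """Fraction of the given voters who support Landon."""
    return sum(votes_landon for _, votes_landon in voters) / len(voters)


def main() -> None:
    rng = random.Random(1936)  # fixed seed so runs are reproducible
    population = make_population(200_000, rng)

    # A small simple random sample drawn from the whole electorate.
    random_sample = rng.sample(population, 1_000)

    # A much larger sample drawn only from the biased sampling frame.
    frame = [voter for voter in population if voter[0]]
    frame_sample = rng.sample(frame, 40_000)

    print(f"True Landon share:         {landon_share(population):.1%}")
    print(f"Random sample (n=1,000):   {landon_share(random_sample):.1%}")
    print(f"Frame sample (n=40,000):   {landon_share(frame_sample):.1%}")


if __name__ == "__main__":
    main()
```

Under these assumptions, the 1,000-person random sample typically lands within a few points of the true share, while the forty-times-larger frame sample sits near 60%. More responses from the wrong frame only make the wrong answer look more precise.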


Selection bias is a common problem with convenience samples, in which researchers survey whoever is easiest to reach. Here in Canada, the Digest case got a reboot when the federal government abolished the mandatory long-form census questionnaire in 2010 and replaced it with the voluntary National Household Survey. In 2011, when asked whether repealing the long-form census would make it harder to obtain accurate data, then-Industry Minister Tony Clement said that high response rates effectively eliminate selection bias. Minister Clement would have done well to study the Digest case. The mandatory long-form questionnaire was reintroduced in 2016.

The Digest poll is also an example of non-response bias, which occurs when the people who respond differ systematically from those who do not, often because respondents have a relatively intense interest in the subject at hand. Here, one can imagine that the anti-Roosevelt minority felt more strongly about the election than the pro-Roosevelt majority. Respondents and nonrespondents favoured opposite candidates: Landon supporters were far more likely to fill out the postcard and send it in.
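Non-response bias comes down to simple arithmetic. The Python sketch below computes the share of returned ballots favouring a candidate when supporters and non-supporters return ballots at different rates. The function names and all rates are hypothetical assumptions, not the Digest’s actual response figures.

```python
def respondent_share(true_share: float, rate_for: float, rate_against: float) -> float:
    """Fraction of returned ballots that favour a candidate.

    true_share   -- candidate's actual support in the surveyed group
    rate_for     -- probability that a supporter returns the ballot
    rate_against -- probability that a non-supporter returns the ballot
    """
    returned_for = true_share * rate_for
    returned_against = (1 - true_share) * rate_against
    return returned_for / (returned_for + returned_against)


def response_rate(true_share: float, rate_for: float, rate_against: float) -> float:
    """Overall fraction of mailed ballots that come back."""
    return true_share * rate_for + (1 - true_share) * rate_against


if __name__ == "__main__":
    # Hypothetical scenarios: a candidate backed by 40% of the surveyed group.
    scenarios = {
        "equal response": (0.40, 0.30, 0.30),
        "supporters keener": (0.40, 0.35, 0.20),
        "keener, response doubled": (0.40, 0.70, 0.40),
    }
    for label, args in scenarios.items():
        print(f"{label:<25} response rate {response_rate(*args):.0%}, "
              f"candidate share among returns {respondent_share(*args):.1%}")
```

In these assumed scenarios, equal response rates recover the true 40%, while keener supporters push the candidate to about 53.8% of returned ballots. Doubling both rates raises the overall response rate from 26% to 52% but leaves that 53.8% unchanged: a higher response rate does not remove the bias while the two groups still respond at different rates.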

The takeaway

The Literary Digest poll of 1936 holds an infamous place in the history of survey research. It has gone down as possibly the worst opinion poll ever, and pollsters still invoke it as a byword for humiliation. The backlash to the erroneous results was monumental, shattering the public’s faith in polls and driving the Digest out of business. But could another polling disaster like “President Alf” happen today? The surprising outcomes of the 2000 and 2016 US presidential elections remind us that, yes, pre-election polls can and do get it wrong.

Public opinion polling embarrassments are not rare, nor are all polling errors alike. Polling has a remarkably checkered record and the discipline is marred by plenty of storied controversies, flops, surprises, upsets, fiascos, and unforeseen landslides. The Digest’s error is the most memorable, but it is far from being the only opinion poll with a manifestly incorrect or confounding outcome.

Scholars commonly point out that the 1936 Gallup poll, which helped make telephone surveys the predominant tool for probability surveys, took place at a time when only about 40 percent of households had telephones. Many voters were therefore still excluded from the ostensibly “scientific” Gallup poll simply because they did not own a telephone. Today, telephone surveys are beginning to show signs of obsolescence, and many researchers have turned to panel surveys instead.

Panel surveys bring their own problems, however. “Professional respondents” can participate in 15 to 20 surveys per month, and evidence suggests that panel respondents may do a sloppy job of answering survey questions, racing thoughtlessly through them to “win” the survey and collect their financial reward with as little effort as possible. Panel respondents also tend not to represent the general population well: they are more likely to be white, less educated, and either young or old rather than middle-aged. Even so, nearly every researcher has used panel surveys to reduce costs, because the greater risk of inaccuracy is often seen as preferable to the much higher cost of a difficult “traditional” survey.

One thing is clear: representative responses are easiest to collect when the widest possible range of people has an opportunity to respond.

A common solution to this challenge is to pull together information from a variety of sources. The extraordinary number of social media users could be one key to cheaper results without compromising accuracy. Another option could be the “event-based” survey. Who knows? Maybe there’s already an emerging technology that will cause a radical rethink of how researchers probe the public.

Wrap-up

Some methods of obtaining research participants are worse than others, and great care is needed when designing a sampling plan to ensure it is representative and unbiased. The Literary Digest poll of 1936 provides a prime example of how not to sample, and how bad sampling can go on to cause abject research (and business) failure.

Reaching people for market research studies has become harder than ever. Whether you are a marketer bouncing ideas off potential clients and consumers to maximize profit, or a pollster taking the pulse of the masses, you need to be certain that your research data were collected in a way that avoids these mistakes.

If you’ve made it this far in the article, you know why it is so important to engage professionals when undertaking market research. Choose a research partner with a deep understanding of sampling techniques who is also willing to lead, refine, and innovate for improved accuracy.

If you are interested in more engagement opportunities with Pivotal Research, please contact the author at rkocsis@pivotalresearch.ca.

